Architecture of the Internet and Data Privacy

One day last year, a recommended video popped up on YouTube’s home page. On a screen full of video suggestions tailored to get my attention, this one won. I clicked through and watched the entire video. I was so impressed by it, I even shared it to a personal social media page to encourage others to watch. How terribly ironic, given that this was the video:

This video opened my eyes to surveillance capitalism, the monetization of personal data collected about people through their movements online and in the real world. It explains both why I was recommended a video that YouTube’s algorithm thought I would like to watch and how tech companies like Facebook, Google, Amazon, YouTube, and others make their money.

The structure of the internet today is built to make seamless experiences like this possible. Some see it as convenience; others who think about it more may consider it creepy. Without getting too far into the weeds, it is an eerily sedating combination of the two, and it can result in serious real-life consequences. Algorithmic curation, the invisible process that enabled the recommendation of this video, shapes our entire personal experience of the internet today. Two people may search for the same keywords and, depending on their geographic location, IP address, and digital profile (created not by the user but for the user, without their consent), the results that appear will likely be drastically different. Upworthy co-founder Eli Pariser explains more:

This should be a cause for major concern among librarians. Providing access to a wide range of information is both a professional responsibility and a civic duty. How are we to handle the fact that each time a patron does an internet search, or interacts with a post on our social media page, they are (more often than not) unknowingly participating in a profit-making business based upon selling insights about their private life? The internet as it is constructed now seeks to provide people not with the best information, but with the most tailored information, and the most profitable for some big tech corporation.

Concerns surrounding internet algorithms include: platforms “listening” across devices or platforms, algorithms and automated decision-making reinforcing qualities, platforms shaping the content and ads that people see, online users not seeing the same reality, platforms selling personal data to third parties, and the permanence of the data being collected about individuals. Whether we like it or not, the entire internet, not just social media, has turned into an aggregator, delivering tailored content right to our fingertips. On the surface, this looks like harmless convenience. These technologies are designed to be nearly undetectable, after all. We may only notice that they are in play when we see what kinds of ads others we know are receiving as they navigate the internet, or when we get an ad for the very product we were just talking about with a friend while a cell phone in a pocket, or some other “smart” device, listened in:

Many of us realize at some level that our online search history, social media activity, and even in-person activities are being monitored. Individuals can largely ignore this realization, or it can consciously shape the way we interact with different areas of public life, which is now as much digital as it is physical. At a subconscious level, this knowledge has a huge impact on the kinds of information we seek out and the kinds of in-person activities we participate in, and it can even supplant our own thoughts or opinions with those that the internet “recommends” to us based upon siphoned-off personal data “breadcrumbs” left by our browsing, viewing, and social activity.

In CNN’s recent special report Inside the QAnon Conspiracy, former DHS counterterrorism official Elizabeth Neumann says, “The way in which [QAnon] went mainstream is they turned these concepts into memes, and things that were very shareable on sites like Facebook and Twitter, that capture people's interest, there's a reason they call it a rabbit hole, right? So the cause, in particular, save the children. They took that slogan and co-opted it and made it feel comfortable for a mainstream person to be like, yes, I want to help save the children. They take one click and learn some things and click another and do some searches. And all of a sudden, they've spent hours and they have gone down this rabbit hole to a place where they've been exposed to some darker theories that are not as simple as the meme they may have initially found.”

The Netflix documentary The Social Dilemma has been a popular entry point into this darker side of connectivity. It may serve as an accessible way to begin a conversation about this nuanced but important topic. Many voice the common refrain, “I’ve got nothing to hide, I don’t care what the internet knows about me! I like the personalized ads and relevant content.” They may know what they are receiving, but have no clue what they are giving up. Tech companies have yet to find the limits of using people’s personal data, and those like Shoshana Zuboff who have a finger on the pulse of this issue claim that things like health information (data gleaned through smart watches) and driving habits (data harvested from insurance monitoring devices) can one day soon be used to deny people vital services like health insurance, car insurance, loans, and more. The thing to know is this: we aren’t just giving Facebook information about topics we “like”; with every hover, page view, and reaction, we give them clues about who we are as people. We aren’t revealing only our preferences, but our personalities.

All of this information is taken without our consent. We don’t know what our personalized data profiles contain. We don’t know all of the companies that have them. And we sure as heck would have a hard time getting any of these companies to give us our data back. The Fourth Amendment of the U.S. Constitution identifies…

“The right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures, shall not be violated, and no warrants shall issue, but upon probable cause, supported by oath or affirmation, and particularly describing the place to be searched, and the persons or things to be seized.”

Compare this with Facebook CEO Mark Zuckerberg’s official motto for the company up until 2014:

“Move fast and break things.”

Indeed, companies moving fast and breaking things have led us to where we are today. We are not powerless, and there is much that can be done about this issue, starting with raising awareness of its existence. Libraries can lead programs, host film screenings and discussions, make resource sheets on data privacy, and set a search engine like DuckDuckGo, Ecosia, or StartPage as the default search engine on public computers instead of Google. By helping patrons to learn more about not only how they interact with data, but how data interacts with them, we can prepare our communities to face new and existing challenges, and meet the moment head-on.

Resources:

Electronic Frontier Foundation

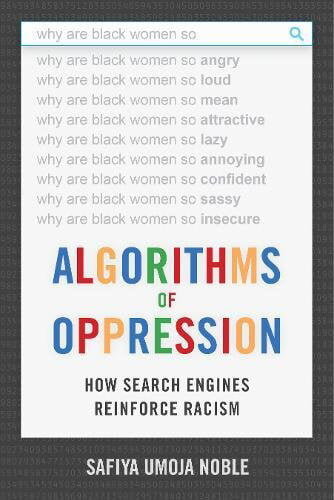

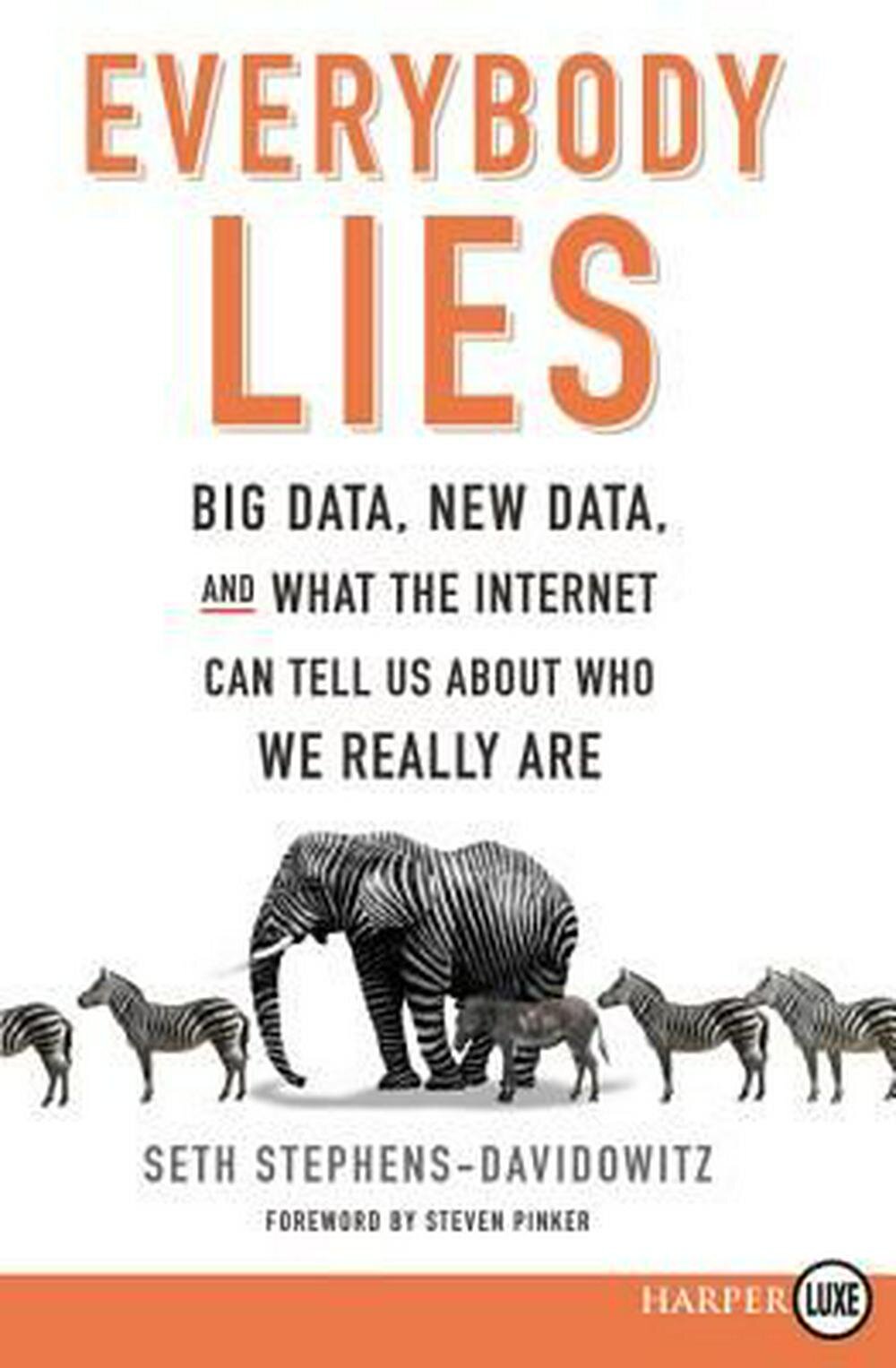

This article is informed by the work of Shoshana Zuboff and Natasha Casey. For more information about the architecture of the internet, check out this recent presentation by Natasha Casey, Professor of Communications at Blackburn College in Carlinville, Illinois.

Main photo by Avi Richards on Unsplash